Microsoft DP-201 - Study Material - Designing an Azure Data Solution

Notice: DP-201 (Designing an Azure Data Solution) has been retired and will no longer receive updates.

Suggested alternative exam: DP-203

DP-201 Study4Pass Exam Detail

Get ready to conquer the Microsoft DP-201 – Designing an Azure Data Solution certification with Study4Pass. Our platform combines realistic exam simulations, up-to-date content, and an intuitive interface to guide you every step of the way and boost your confidence on exam day.

What is in the Premium File?

Single Choices: 131 Questions

Multiple Choices: 18 Questions

Drag Drops: 14 Questions

Hotspots: 46 Questions

Case Study 1: 5 Questions

Case Study 2: 4 Questions

Case Study 3: 3 Questions

Case Study 4: 5 Questions

Case Study 5: 3 Questions

Case Study 6: 2 Questions

Case Study 7: 4 Questions

Case Study 8: 4 Questions

Case Study 9: 4 Questions

Case Study 10: 2 Questions

Case Study 11: 2 Questions

Case Study 12: 2 Questions

Case Study 13: 2 Questions

Mixed Questions: 167 Questions

Free Study4Pass Exam Simulator

The Study4Pass Free Exam Simulator Test Engine for Microsoft DP-201 (Designing an Azure Data Solution) stands out as the premier tool for exam preparation, offering an unparalleled blend of realism, versatility, and user-centric features.

Here’s why it’s hailed as the best exam simulator test engine:

Realistic Exam Environment

Complete, Updated Content

Deep Learning Support

Customizable Practice

High Pass Rates

24/7 Support

Free Demos

Affordable Pricing

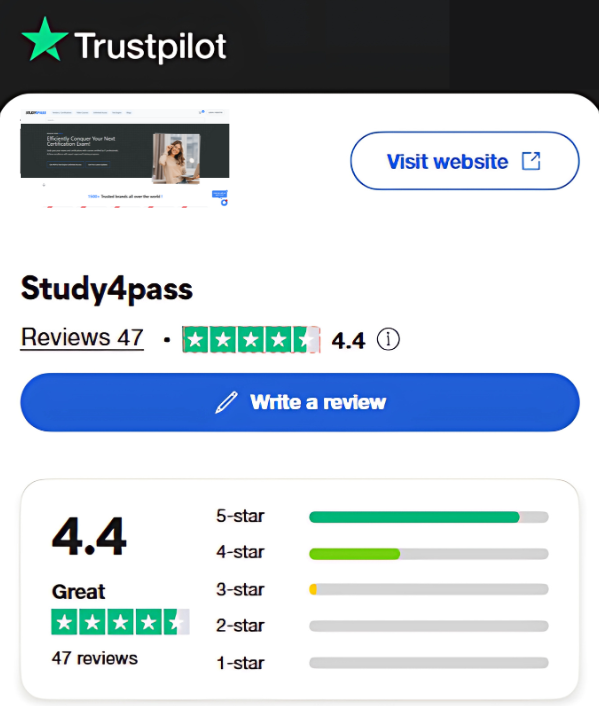

What Users Say About Study4Pass: Trustpilot Reviews

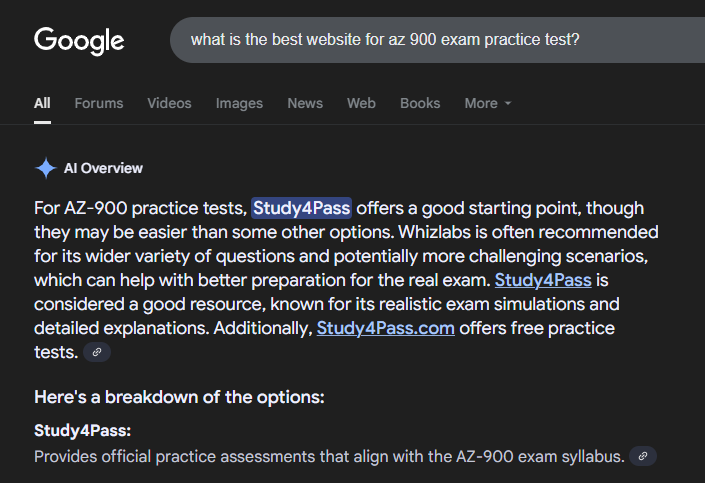

Google Recommends Study4Pass

Your Trust is Our Strength - Recognized as the leading exam practice platform

Google's Top Recommendation

Frequently Recommended: Top choice for exam preparation

Up-to-date Content: Well-organized material that mirrors the exam

Exam-Like Format: Closely resembles actual exam structure

"Study4Pass's website is praised for its up-to-date, well-organized material that closely resembles the exam format."

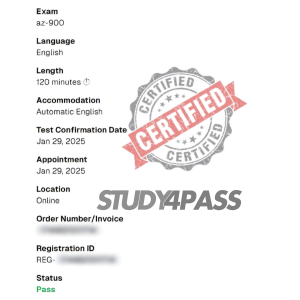

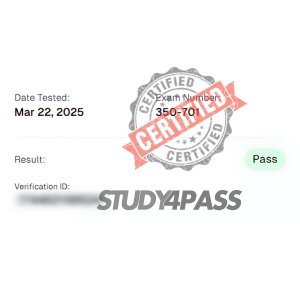

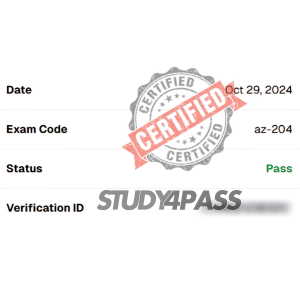

Satisfaction Guarantee

100% Peace of Mind

Our comprehensive exam materials deliver the reliability you need to master every question.

Lifetime Access

One-time payment for unlimited access to all course materials and updates.

Study4Pass Microsoft DP-201 Designing an Azure Data Solution 100% Satisfaction Guarantee

At Study4Pass, we proudly offer hassle-free study material for the Microsoft DP-201 (Designing an Azure Data Solution) certification exam, backed by a 100% satisfaction guarantee. Our dedicated technical team works tirelessly to deliver the most up-to-date, high-quality training materials and exam practice questions. We are confident in the value and effectiveness of our content, ensuring a compelling learning experience that helps you succeed. Your satisfaction is our top priority, guaranteed.

Refund Policy

We stand behind our products with a customer-friendly refund policy.

30-Day Money Back

If you're not completely satisfied with our materials, request a full refund within 30 days of purchase.

No Questions Asked

We process all refund requests without hassle or complicated procedures.

Questions? Contact [email protected]
